Background knowledge of image processing

What is a camera?

We have all seen cameras of many kinds, such as these:

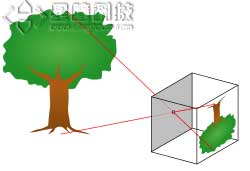

What is a camera? Fundamentally, it is a device that converts optical signals into electrical signals. In computer vision, the simplest camera model is the pinhole imaging model.

The pinhole model is an idealized camera model: it ignores field curvature, distortion, and other aberrations present in real cameras. In practice, these effects can be compensated by adding distortion parameters during calibration, so the pinhole model remains the most widely used camera model.
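A minimal sketch of pinhole projection, assuming a point already expressed in camera coordinates (Z pointing out of the lens) and hypothetical intrinsic parameters `fx`, `fy` (focal length in pixels) and `cx`, `cy` (principal point). Image position is proportional to X/Z and Y/Z, which is why distant objects look smaller.

```python
def project_point(X, Y, Z, fx, fy, cx, cy):
    """Project a camera-frame 3D point (X, Y, Z) to pixel coordinates (u, v)
    using an ideal pinhole model (no lens distortion)."""
    if Z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    u = fx * X / Z + cx
    v = fy * Y / Z + cy
    return u, v


# Hypothetical intrinsics for a 640x480 image: focal length in pixels,
# principal point at the image center.
FX = FY = 500.0
CX, CY = 320.0, 240.0

near = project_point(0.1, 0.05, 1.0, FX, FY, CX, CY)
far = project_point(0.1, 0.05, 2.0, FX, FY, CX, CY)  # same point, twice as far
print(near, far)
```

Moving the point twice as far away halves its offset from the principal point.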

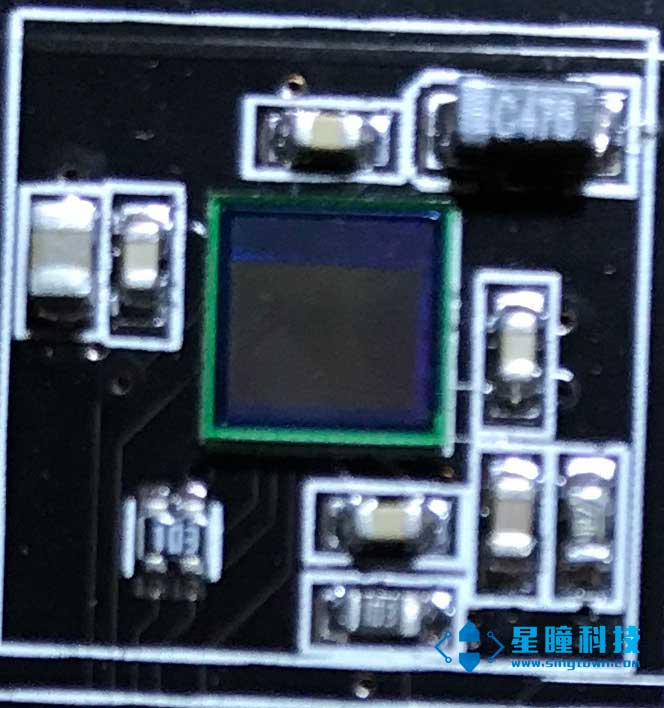

Light from the scene passes through the lens and falls on a photosensitive chip. The chip converts information such as the wavelength and intensity of the light into a digital signal that a computer (digital circuit) can process. The photosensitive element looks like this:

(The square element in the middle is the photosensitive element)

What are pixels and resolution?

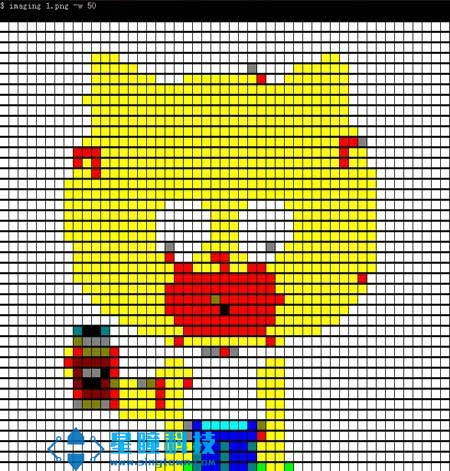

The photosensitive element is composed of many photosensitive points, for example 640×480 of them. Each point is a pixel; the values of all the points are read out and arranged into a picture, so the resolution of this picture is 640×480:
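The arithmetic behind resolution is simple, and it also shows why high resolutions are costly on a small board. The sketch below assumes three common uncompressed pixel formats (8-bit grayscale at 1 byte, 16-bit RGB565 at 2 bytes, 24-bit RGB888 at 3 bytes per pixel); the helper names are hypothetical.

```python
# Bytes per pixel for a few common uncompressed formats (assumed for illustration).
BYTES_PER_PIXEL = {"grayscale": 1, "rgb565": 2, "rgb888": 3}


def pixel_count(width, height):
    """Number of pixels (photosensitive points) in a width x height image."""
    return width * height


def frame_bytes(width, height, pixel_format):
    """Raw, uncompressed size of one frame in bytes."""
    return pixel_count(width, height) * BYTES_PER_PIXEL[pixel_format]


print(pixel_count(640, 480))  # 307200
for fmt in BYTES_PER_PIXEL:
    print(fmt, frame_bytes(640, 480, fmt))
```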

What is frame rate?

The frame rate (FPS, frames per second) is the number of pictures processed per second. Above roughly 20 frames per second, the human eye can hardly perceive stutter. For a machine, a higher frame rate is generally better. The maximum frame rates of OpenMV compare as follows. Note: entries without a mark were measured without transmitting the image to the IDE, because that transfer is very time-consuming.
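Frame rate is usually measured by timestamping each processed frame and averaging over a short window. The sketch below is plain Python, not OpenMV's own API; `FpsMeter` and `frame_period_ms` are hypothetical names. It also shows the per-frame time budget: at 20 FPS, each frame must be finished in 50 ms.

```python
import time
from collections import deque


class FpsMeter:
    """Estimate frames per second from the timestamps of recent frames."""

    def __init__(self, window=30):
        # Keep window + 1 timestamps so that `window` frame intervals are averaged.
        self.timestamps = deque(maxlen=window + 1)

    def tick(self, t=None):
        """Record that a frame finished at time t (seconds); defaults to now."""
        self.timestamps.append(time.perf_counter() if t is None else t)

    def fps(self):
        """Average FPS over the stored interval, or 0.0 if there is not enough data."""
        if len(self.timestamps) < 2:
            return 0.0
        elapsed = self.timestamps[-1] - self.timestamps[0]
        return (len(self.timestamps) - 1) / elapsed if elapsed > 0 else 0.0


def frame_period_ms(fps):
    """Time budget per frame in milliseconds at a given frame rate."""
    return 1000.0 / fps


meter = FpsMeter()
for i in range(21):
    meter.tick(i * 0.05)  # simulated frames every 50 ms
print(meter.fps())  # about 20
```

In a real loop, call `meter.tick()` with no argument once per frame.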

What is color?

Physically, color corresponds to electromagnetic waves of different wavelengths.

However, based on how the human eye perceives it, the color of visible light can be described with the RGB, CMYK, HSB, and LAB color spaces.
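The same color has different coordinates in each color space. As one illustration, Python's standard `colorsys` module converts RGB to HSV, which is the same model as HSB. The wrapper below is a hypothetical helper that assumes 8-bit RGB input and reports hue in degrees and saturation/brightness in percent.

```python
import colorsys


def rgb_to_hsb(r, g, b):
    """Convert 8-bit RGB (0-255) to HSB (HSV): hue in degrees,
    saturation and brightness in percent."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 360.0, s * 100.0, v * 100.0


print(rgb_to_hsb(255, 0, 0))  # pure red: hue 0, full saturation and brightness
print(rgb_to_hsb(0, 0, 255))  # pure blue: hue 240
```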

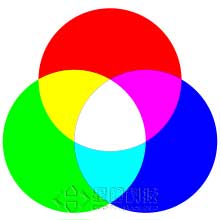

RGB primary colors

The three primary colors arise from human physiology, not physics. The human eye has three types of cone cells that distinguish colors; they are most sensitive to yellow-green, green, and blue-violet (or violet) light, with peak wavelengths of about 564, 534, and 420 nanometers, respectively.

This is why monitors commonly use RGB to display pictures.

LAB brightness-contrast

In the Lab color space, L is lightness; positive a values represent red and negative values represent green; positive b values represent yellow and negative values represent blue. Unlike the RGB and CMYK color spaces, Lab is designed to approximate human vision.

Therefore, the L component can be used to adjust lightness contrast, while the output levels of the a and b components can be modified to make accurate color-balance corrections.
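To make the L, a, b axes concrete, here is a sketch of the standard CIE conversion from 8-bit sRGB to Lab, assuming the D65 white point. This is the textbook formulation, not necessarily how OpenMV computes Lab internally (its scaling or lookup tables may differ).

```python
def srgb_to_lab(r, g, b):
    """Convert 8-bit sRGB (0-255) to CIE L*a*b* under a D65 white point."""

    def to_linear(c):
        # Undo the sRGB gamma curve.
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)

    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 reference white

    def f(t):
        delta = 6.0 / 29.0
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3.0 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    lightness = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)  # > 0 toward red, < 0 toward green
    b_ = 200.0 * (fy - fz)  # > 0 toward yellow, < 0 toward blue
    return lightness, a, b_


print(srgb_to_lab(255, 255, 255))  # white: L near 100, a and b near 0
print(srgb_to_lab(255, 0, 0))      # red: large positive a and b
```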

Note: OpenMV's algorithm for finding color blobs uses this LAB color space!
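Color-blob detection in Lab typically tests each pixel against a box of minimum and maximum L, a, and b values. The sketch below assumes the six-value ordering (L_min, L_max, A_min, A_max, B_min, B_max) that OpenMV uses for LAB thresholds; the threshold values and the sample colors are hypothetical. It also previews why lighting matters: the darker red below has the same hue but falls outside the L range.

```python
# Threshold layout assumed: (L_min, L_max, A_min, A_max, B_min, B_max).
RED_THRESHOLD = (30, 100, 15, 127, 15, 127)  # hypothetical "red" range


def in_lab_threshold(lab, threshold):
    """Return True if an (L, a, b) color lies inside the threshold box."""
    l, a, b = lab
    l_min, l_max, a_min, a_max, b_min, b_max = threshold
    return l_min <= l <= l_max and a_min <= a <= a_max and b_min <= b <= b_max


bright_red = (53.2, 80.1, 67.2)  # approx. Lab of sRGB (255, 0, 0)
dim_red = (25.5, 48.0, 38.1)     # approx. Lab of sRGB (128, 0, 0): same hue, darker

print(in_lab_threshold(bright_red, RED_THRESHOLD))  # True
print(in_lab_threshold(dim_red, RED_THRESHOLD))     # False: L falls below 30
```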

Choice of light source

If your machine is used in industry, or runs around the clock, maintaining a stable light source is vital, especially for color algorithms. Once the brightness changes, all the measured color values change significantly!


Focal length of the lens

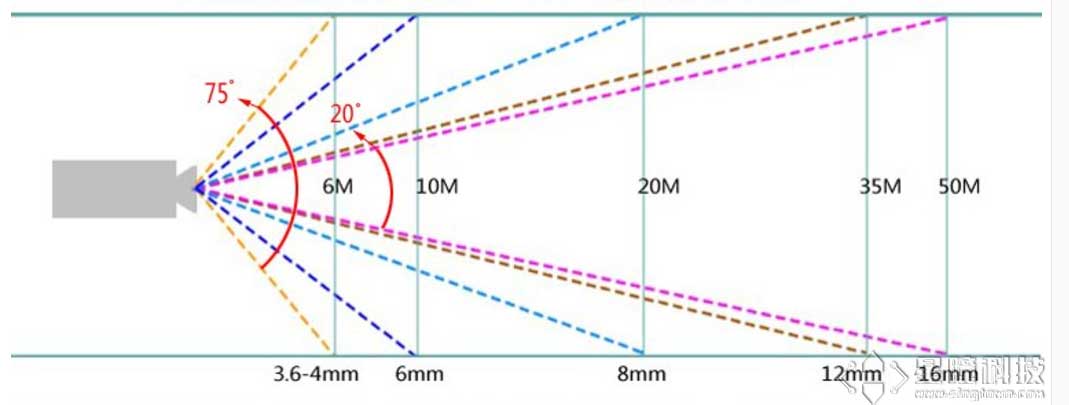

Because light is refracted by the lens before it reaches the photosensitive element, the lens determines the size and distance of everything in the picture. One of its most important parameters is the focal length.

Focal length of the lens: the distance from the principal point of the lens to the rear focal point. It is an important performance indicator of a lens. The focal length determines the size of the image, the field of view, the depth of field, and the perspective of the picture. When shooting the same subject from the same distance, a lens with a long focal length forms a large image, and a lens with a short focal length forms a small image. Note that the longer the focal length, the smaller the angle of view.
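The two rules above follow from simple geometry. For an ideal, distortion-free lens focused far away, the angle of view across a sensor dimension d is 2·atan(d / 2f), and an object of size H at distance Z forms an image of roughly f·H / Z on the sensor. The sketch below assumes a hypothetical 3.6 mm sensor width; the 6 mm and 12 mm lenses are also hypothetical examples alongside the 2.8 mm lens mentioned in this section.

```python
import math


def field_of_view_deg(sensor_size_mm, focal_length_mm):
    """Angle of view (degrees) across one sensor dimension for an
    ideal, distortion-free lens focused at infinity."""
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))


def image_size_mm(object_size_mm, distance_mm, focal_length_mm):
    """Approximate size of an object's image on the sensor (pinhole model)."""
    return focal_length_mm * object_size_mm / distance_mm


SENSOR_WIDTH_MM = 3.6  # hypothetical sensor width, not a specific OpenMV part
for f in (2.8, 6.0, 12.0):
    fov = field_of_view_deg(SENSOR_WIDTH_MM, f)
    size = image_size_mm(100.0, 200.0, f)  # 100 mm object at 20 cm
    print(f"f={f} mm: FOV={fov:.1f} deg, image of object={size:.2f} mm")
```

Longer focal lengths give a larger image and a narrower angle of view, matching the description above.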

Another point is lens distortion. Because of the optics, different positions on the photosensitive chip are at different distances from the lens; simply put, things appear larger when closer and smaller when farther away. Therefore a fisheye effect (barrel distortion) appears at the edges of the image. To solve this problem, you can correct the distortion with an algorithm in code. Note: OpenMV uses image.lens_corr(1.8) to correct a lens with a focal length of 2.8mm. You can also use a distortion-free lens directly. A distortion-free lens has additional corrective lens elements, so it is considerably more expensive.
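One common textbook way to describe barrel distortion is the polynomial radial model (Brown-Conrady), where a point at radius r from the image center is scaled by 1 + k1·r² + k2·r⁴; a negative k1 pulls edge points inward. The sketch below is a generic illustration under that assumption, not a description of what image.lens_corr does internally. Coordinates are assumed normalized (centered on the principal point and divided by the focal length), and the coefficient value is hypothetical; real values come from calibration.

```python
def distort(x, y, k1, k2=0.0):
    """Apply radial distortion to normalized, centered coordinates (x, y).
    k1 < 0 produces barrel distortion: points move toward the center."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd, yd, k1, k2=0.0, iterations=20):
    """Invert `distort` by fixed-point iteration (adequate for moderate distortion)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


K1 = -0.2  # hypothetical barrel-distortion coefficient
xd, yd = distort(0.5, 0.3, K1)
print((xd, yd), undistort(xd, yd, K1))  # corrected point is close to (0.5, 0.3)
```

Correcting a whole image applies the same idea per pixel: for each output pixel, compute where it came from in the distorted image and sample there.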

Below is a comparison of lenses with different focal lengths, with the OpenMV about 20 cm away from the desktop:

Lens filter

There is usually a filter on the lens.

What does this filter do?

We know that different colors of light have different wavelengths. In a normal environment, in addition to visible light, there is also a lot of infrared light. In night vision, infrared light is used.

However, ordinary color applications do not need infrared light: the photosensitive element also responds to infrared, which washes out the whole picture and makes it look whitish. So a filter that passes only wavelengths below about 650 nm is placed on the lens to cut off the infrared light.